Building A Video Synthesizer in Python

Video synthesizers are devices that create a visual signal from an audio input. They’ve got a long history, going back to at least the 1960s.

In the 1960s, Lee Harrison started building a video synthesizer called Scanimate, which could take voice input and use it to animate a face.

More recently, Critter and Guitari have built a bunch of insane video synthesizers that react to live music performance. They’re meant to enhance and automatically accompany a performance.

Here’s one of their video synthesizers, called the Rhythm Scope, in action:

Today, we’ll write a basic video synthesizer in Python, using aubio for onset detection and Pygame to draw our graphics. We’ll end up with a program ready to be played out through a projector.

Since I don’t have a drum set (yet!), I’ll use my guitar to drive onsets and draw on the screen.

Before We Begin

You’ll need to have a way to get audio into your program.

For my video, I used a cheap Rocksmith USB cable. But if you’ve got an audio interface for your computer, feel free to use that instead, hooked up to your instrument of choice.

If you don’t have a guitar to use as an input, you can also just use your computer’s mic, and either make loud noises or play drums, like you see in the Critter and Guitari video above. We’ll be using onset detection to draw our circles, so it won’t really matter where the sound comes from, as long as it’s loud enough to trigger an onset.

(And if you don’t have a guitar, why the hell not?! You can get one of these along with this, and be playing in a few days.)

Architecture of A Video Synthesizer in Python

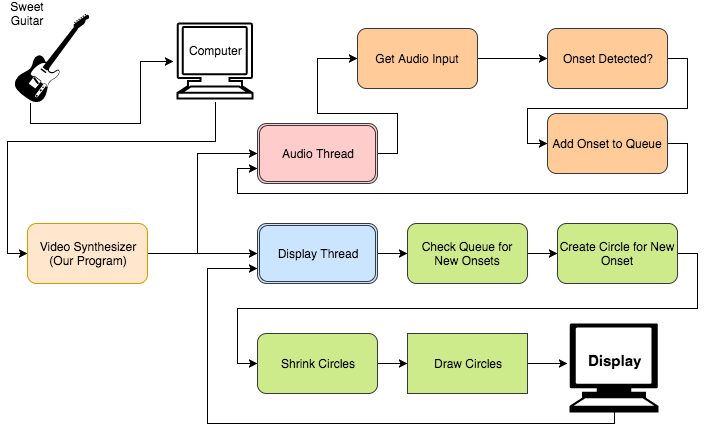

With visual effects accompanying a performance, we’ll want as little latency as possible. We don’t want to wait for each set of audio frames to come in before we begin drawing to the screen.

Because of this, we use two threads in this program: one to read in our audio and detect onsets, and another to draw our circles to the screen.

We use the PyAudio and aubio libraries to first read in audio input from our computer, and then analyze a set of frames for an onset. Once we’ve detected an onset, we push a new value onto a queue for the other thread to read.

In our display thread, we loop infinitely, first checking if there’s a new onset detected on the queue, and then creating a new circle if there is. We then shrink and draw our circles to the screen.

With this basic flow, we’ve got the start of a video synthesizer, ready to react in real time to the audio coming in from our computer.
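The thread-and-queue flow above can be tried without any audio hardware. In this minimal sketch, a hypothetical `fake_onsets` producer thread stands in for the PyAudio/aubio thread and pushes a value onto a `queue.Queue` at random intervals, while a hypothetical `run_display` loop polls the queue once per frame without blocking, the way the drawing loop does. Both function names are illustrative, not part of the program we build below.

```python
import queue
import random
import threading
import time

def fake_onsets(q, count, stop):
    """Hypothetical stand-in for the audio thread: emit `count` onsets."""
    for _ in range(count):
        if stop.is_set():
            break
        time.sleep(random.uniform(0.005, 0.02))  # pretend we waited for audio
        q.put(True)  # one item per detected onset

def run_display(q, frames, fps=90):
    """Stand-in for the drawing loop: poll the queue once per frame."""
    circles = 0
    for _ in range(frames):
        try:
            q.get_nowait()  # never block the display waiting on audio
            circles += 1  # a real loop would create a Circle here
        except queue.Empty:
            pass
        time.sleep(1 / fps)
    return circles

if __name__ == "__main__":
    q = queue.Queue()
    stop = threading.Event()
    producer = threading.Thread(target=fake_onsets, args=(q, 10, stop), daemon=True)
    producer.start()
    print("circles created:", run_display(q, frames=90))
    stop.set()
    producer.join()
```

Because the display side only ever calls the non-blocking `get_nowait()`, a slow or silent audio source never stalls the frame rate; frames with no onset simply draw the existing circles.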

Writing the Code

The first thing we’ll want to do is allow our users to select the proper input device to begin reading audio from. On my personal computer, I have 7 different audio inputs to choose from.

So it’s important that after we import our libraries, we open and describe what the available inputs are before proceeding:

```python
import pyaudio
import sys
import numpy as np
import aubio
import pygame
import random
from threading import Thread
import queue
import time
import argparse

parser = argparse.ArgumentParser()
parser.add_argument("-input", required=False, type=int, help="Audio Input Device")
parser.add_argument("-f", action="store_true", help="Run in Fullscreen Mode")
args = parser.parse_args()

# compare against None rather than testing truthiness, so device 0 can be selected
if args.input is None:
    print("No input device specified. Printing list of input devices now: ")
    p = pyaudio.PyAudio()
    for i in range(p.get_device_count()):
        print("Device number (%i): %s" % (i, p.get_device_info_by_index(i).get('name')))
    print("Run this program with -input 1, or the number of the input you'd like to use.")
    p.terminate()  # release PortAudio before exiting
    sys.exit()
```

With this argparse setup, our users get a list of the audio inputs, and are given the option to specify one from the command line. So your first time running it might look like this:

```
$ python3 video-synthesizer.py
Device number (0): HDA Intel PCH: ALC1150 Analog (hw:0,0)
Device number (1): HDA Intel PCH: ALC1150 Digital (hw:0,1)
Device number (2): HDA Intel PCH: ALC1150 Alt Analog (hw:0,2)
Device number (3): HDA NVidia: HDMI 0 (hw:1,3)
Device number (4): HDA NVidia: HDMI 1 (hw:1,7)
Device number (5): HDA NVidia: HDMI 2 (hw:1,8)
Device number (6): HDA NVidia: HDMI 3 (hw:1,9)
Device number (7): HD Pro Webcam C920: USB Audio (hw:2,0)
Device number (8): Rocksmith USB
Run this program with -input 1, or the number of the input you'd like to use.
$ python3 video-synthesizer.py -input 8
```

With this, we can start up our program, using the Rocksmith USB as our input.
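The device list above mixes real inputs with output-only devices such as the HDMI outputs. PyAudio’s `get_device_info_by_index()` returns a dict that includes a `maxInputChannels` field, so one option is to show only devices that can actually record. The sketch below keeps that filtering in a plain function (the name `input_devices` is hypothetical) so it can be tried on hand-written dicts; the device names and channel counts in the example data are made up. With PyAudio you would pass it `[p.get_device_info_by_index(i) for i in range(p.get_device_count())]`.

```python
def input_devices(device_infos):
    """Return (index, name) pairs for devices that can record audio.

    device_infos is a list of dicts shaped like PyAudio's
    get_device_info_by_index() results, in device-index order.
    """
    return [
        (i, info.get("name"))
        for i, info in enumerate(device_infos)
        if info.get("maxInputChannels", 0) > 0  # output-only devices report 0
    ]

# Made-up example data; a real run would query PyAudio instead.
devices = [
    {"name": "Built-in Audio Analog", "maxInputChannels": 2},
    {"name": "HDMI Output", "maxInputChannels": 0},
    {"name": "USB Guitar Cable", "maxInputChannels": 1},
]
for index, name in input_devices(devices):
    print("Device number (%i): %s" % (index, name))
```

The original device numbers are kept, so whatever number is printed can still be passed straight to `-input`.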

Next, we can initialize Pygame. If we plan on using a projector for our real time performance, we should run Pygame in fullscreen mode. Otherwise, we should run in a windowed mode for debug and development purposes.

You’ll see I added an -f flag to our argparse parser above: if we pass -f on the command line, we run fullscreen; otherwise, we run in a window. The code to handle this flag looks like this:

```python
pygame.init()

if args.f:
    # run in fullscreen
    screenWidth, screenHeight = 1024, 768
    screen = pygame.display.set_mode((screenWidth, screenHeight), pygame.FULLSCREEN | pygame.HWSURFACE | pygame.DOUBLEBUF)
else:
    # run in window
    screenWidth, screenHeight = 800, 800
    screen = pygame.display.set_mode((screenWidth, screenHeight))
```

Next, we define our Circle object, the set of colors we’ll draw our circles with, and a list to store all of the circles in.

```python
white = (255, 255, 255)
black = (0, 0, 0)

class Circle(object):
    def __init__(self, x, y, color, size):
        self.x = x
        self.y = y
        self.color = color
        self.size = size

    def shrink(self):
        self.size -= 3

colors = [(229, 244, 227), (93, 169, 233), (0, 63, 145), (255, 255, 255), (109, 50, 109)]

circleList = []
```

You’ll notice that the Circle object has a single method, shrink(), which reduces its radius by 3 pixels. We call it once on every pass through the drawing loop.

Later, we’ll remove circles from our main list once their size drops below 1.

Next, we can add in our audio setup, initializing PyAudio and our aubio Onset detector:

```python
# initialise pyaudio
p = pyaudio.PyAudio()

clock = pygame.time.Clock()

# open stream
buffer_size = 4096  # needed to change this to get undistorted audio
pyaudio_format = pyaudio.paFloat32
n_channels = 1
samplerate = 44100
stream = p.open(format=pyaudio_format,
                channels=n_channels,
                rate=samplerate,
                input=True,
                input_device_index=args.input,
                frames_per_buffer=buffer_size)

time.sleep(1)

# setup onset detector
tolerance = 0.8  # not read by aubio.onset; onset.set_threshold() is the sensitivity knob
win_s = 4096  # fft size
hop_s = buffer_size // 2  # hop size: aubio's onset object expects this many samples per call
onset = aubio.onset("default", win_s, hop_s, samplerate)

q = queue.Queue()
```

These values are mostly guesses, and I’ve used aubio’s default onset detection method. You might want to play around with the window size, hop size, and detection threshold to get a better fit for your instrument and your audio.
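To get a feel for what the onset detector does with each hop of audio, here is a deliberately crude stand-in. This is a swapped-in technique, not aubio’s algorithm (aubio’s methods work on the spectrum of each frame): the hypothetical `energy_onsets` function flags a hop whose RMS level jumps above a multiple of the previous hop’s level and above a silence floor. The `ratio` and `floor` values are arbitrary.

```python
import math

def rms(block):
    """Root-mean-square level of a block of samples."""
    return math.sqrt(sum(x * x for x in block) / len(block)) if block else 0.0

def energy_onsets(samples, hop, ratio=3.0, floor=0.01):
    """Return the start index of each hop whose level jumps sharply.

    A crude illustration only: aubio's onset methods use spectral
    detection functions, not raw energy.
    """
    onsets = []
    previous = 0.0
    for start in range(0, len(samples) - hop + 1, hop):
        level = rms(samples[start:start + hop])
        if level > floor and level > ratio * previous:
            onsets.append(start)
        previous = level
    return onsets

# Synthetic test signal: silence, a loud 440 Hz burst, then silence again.
sr, hop = 44100, 2048
signal = [0.0] * (4 * hop)
for n in range(4 * hop, 8 * hop):
    signal.append(0.8 * math.sin(2 * math.pi * 440 * n / sr))
signal += [0.0] * (4 * hop)
print(energy_onsets(signal, hop))  # [8192]
```

Only the first hop of the burst is reported: the following hops are just as loud, but not louder than the one before, which is the same "something new just started" idea the real detector captures far more robustly. The threshold plays the role that `onset.set_threshold()` plays for aubio.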

One thing to note about the PyAudio stream we open: on Linux, I had to set my buffer size to a fairly large 4096 to get good audio quality. With anything smaller, I’d get a bit of static coming from my input.
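To put those sizes in perspective, each block of samples covers a fixed slice of time at 44,100 samples per second, which sets a floor on how quickly an onset can reach the screen. A quick calculation, using a helper (`block_ms`) of our own rather than anything from aubio or PyAudio:

```python
def block_ms(samples, samplerate=44100):
    """Duration of a block of audio samples, in milliseconds."""
    return 1000.0 * samples / samplerate

buffer_size = 4096
hop_s = buffer_size // 2  # 2048, as in the setup code
win_s = 4096

print("hop:    %.1f ms" % block_ms(hop_s))  # hop:    46.4 ms
print("window: %.1f ms" % block_ms(win_s))  # window: 92.9 ms
```

So the detector makes a new onset decision roughly every 46 ms and each FFT looks at about 93 ms of audio. A smaller hop means more frequent decisions (and more calls into aubio), which is one reason to experiment with these values alongside the buffer size.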

Finally, we create the queue we’ll use to pass messages from the audio thread back to the main Pygame thread.

Drawing Our Onsets

We use a very basic example to begin drawing from our onsets. We create a main Pygame drawing loop that clears the screen, and then iterates over each of the remaining circles in our list.

We shrink each circle on every pass through the loop, and also check the queue for new onsets. If there is one, we create a new circle at a random position and add it to the list.

```python
def draw_pygame():
    running = True
    while running:
        key = pygame.key.get_pressed()
        if key[pygame.K_q]:
            running = False

        for event in pygame.event.get():
            if event.type == pygame.QUIT:
                running = False

        # turn at most one pending onset per frame into a new circle
        if not q.empty():
            q.get()
            newCircle = Circle(random.randint(0, screenWidth), random.randint(0, screenHeight),
                               random.choice(colors), 700)
            circleList.append(newCircle)

        screen.fill(black)

        # iterate over a copy, so removing a circle doesn't skip the next one
        for circle in circleList[:]:
            if circle.size < 1:
                circleList.remove(circle)
            else:
                pygame.draw.circle(screen, circle.color, (circle.x, circle.y), circle.size)
                circle.shrink()

        pygame.display.flip()
        clock.tick(90)
```
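Two details of this loop are worth spelling out. Every onset creates a circle with radius 700 that loses 3 pixels per frame, and `clock.tick(90)` caps the loop at 90 frames per second, so each circle lives for a predictable number of frames. It is also safer not to pop items from a list while enumerating it, because each removal shifts the next item into a slot the loop has already visited. The sketch below shows both with a stripped-down `Circle` and hypothetical `update_circles` and `frames_alive` helpers.

```python
class Circle(object):
    def __init__(self, size):
        self.size = size

    def shrink(self):
        self.size -= 3

def update_circles(circles):
    """Shrink every visible circle once and return the ones to keep.

    Building a new list avoids popping items while iterating,
    which would skip the element after each removal.
    """
    survivors = []
    for circle in circles:
        if circle.size >= 1:  # same cutoff as the drawing loop
            circle.shrink()  # a real loop would draw it first
            survivors.append(circle)
    return survivors

def frames_alive(start_size=700):
    """Count how many frames a circle is drawn before it is culled."""
    circles = [Circle(start_size)]
    frames = 0
    while any(c.size >= 1 for c in circles):
        circles = update_circles(circles)
        frames += 1
    return frames

frames = frames_alive(700)
print("%d frames, about %.1f s at 90 fps" % (frames, frames / 90))  # 234 frames, about 2.6 s at 90 fps
```

If the loop keeps up with the 90 fps cap, each circle stays on screen for roughly 2.6 seconds; a smaller starting size or a larger shrink step makes the visuals snappier.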

Creating Our Audio Thread

Our audio and onset detection thread is pretty straightforward once we’ve got the infrastructure set up for our drawing.

We simply loop: read a buffer of audio, then run it through our onset detector. If an onset is detected in the current buffer, we put a new item on the queue and let the main drawing thread pick it up.

This all happens in its own thread, regardless of the current state of the drawing part of the program.

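```python
listening = True  # cleared by the main thread on shutdown

def get_onsets():
    # Ctrl+C (KeyboardInterrupt) is only delivered to the main thread,
    # so this loop stops via the `listening` flag instead.
    while listening:
        # read exactly hop_s frames, the block size aubio's onset object expects
        audiobuffer = stream.read(hop_s, exception_on_overflow=False)
        # np.frombuffer replaces the deprecated np.fromstring for binary data
        signal = np.frombuffer(audiobuffer, dtype=np.float32)
        if onset(signal):
            q.put(True)

t = Thread(target=get_onsets, args=())
t.daemon = True
t.start()

draw_pygame()

# let the audio thread finish its current read before closing the stream
listening = False
t.join()

stream.stop_stream()
stream.close()
p.terminate()
pygame.display.quit()
```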

With this, our code is ready to go, and creates the effect we can see in the YouTube video.

Next Steps

aubio also has pitch detection, so that’s the most obvious next thing to program: visual feedback based on which note is being played on your guitar.
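As a starting point, the frequency estimate from a pitch detector (aubio provides `aubio.pitch` for this) can be turned into a note name with the standard MIDI formula, m = 69 + 12 * log2(f / 440), and the note can then pick a color from our palette. The `note_for` and `color_for` names and the pitch-class-to-color mapping are hypothetical choices for illustration.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
colors = [(229, 244, 227), (93, 169, 233), (0, 63, 145), (255, 255, 255), (109, 50, 109)]

def note_for(freq):
    """Map a frequency in Hz to (MIDI number, note name), e.g. 440 -> A4."""
    if freq <= 0:
        return None  # pitch detectors often report 0 Hz when there is no clear pitch
    midi = int(round(69 + 12 * math.log2(freq / 440.0)))
    octave = midi // 12 - 1  # MIDI 60 is middle C, C4
    return midi, "%s%d" % (NOTE_NAMES[midi % 12], octave)

def color_for(freq):
    """Hypothetical mapping: the pitch class picks one of the palette colors."""
    note = note_for(freq)
    if note is None:
        return None
    midi, _ = note
    return colors[midi % 12 % len(colors)]

print(note_for(440.0))  # (69, 'A4')
print(note_for(82.41))  # (40, 'E2'), the low E string in standard tuning
```

Because the mapping uses the pitch class, the same note in any octave gets the same color; mapping the octave to circle size instead would be another easy variation.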

But besides that, we can also make use of the FFT to break down the signal we’re getting from the guitar itself, to make a more interactive visual. Onsets are really just the tip of the iceberg.
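For example, rather than a single yes/no onset, each audio buffer could be split into a few frequency bands, with each band’s strength driving a different visual parameter. Here is a minimal NumPy sketch, assuming mono float32 buffers like the ones our audio thread reads; the band edges and the `band_levels` name are arbitrary choices for illustration.

```python
import numpy as np

def band_levels(signal, samplerate=44100, bands=((20, 250), (250, 2000), (2000, 8000))):
    """Return the summed FFT magnitude in each (low, high) Hz band."""
    window = np.hanning(len(signal))  # taper the edges to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(signal * window))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / samplerate)
    return [float(spectrum[(freqs >= lo) & (freqs < hi)].sum()) for lo, hi in bands]

# A 1 kHz test tone should land in the middle (250-2000 Hz) band.
t = np.arange(2048) / 44100.0
tone = (0.5 * np.sin(2 * np.pi * 1000 * t)).astype(np.float32)
levels = band_levels(tone)
print(levels.index(max(levels)))  # 1
```

In the synthesizer, the low band might control circle size and the high band color brightness; the levels would need some smoothing and scaling before they look good on screen.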

Critter and Guitari have done a really good job here too: they’ve got a new product, called the ETC, that allows for these sorts of things and, even better, is programmable in Python and Pygame!

Judging from their demo videos, they’ve really nailed the video synthesizer in this little box.

What can you make? I’d love to see what you build and how you play! Reach out to me on Twitter or Instagram with any of your creations.

As always, the code for this post is available on GitHub.

If you’re interested in more projects like these, please sign up for my newsletter or create an account here on Make Art with Python. You’ll get the first three chapters of my new book free when you do, and you’ll help me continue making projects like these.